Cross-covariance

In statistics, the term cross-covariance is sometimes used to refer to the covariance cov(X, Y) between two random vectors X and Y, in order to distinguish that concept from the "covariance" of a random vector X, which is understood to be the matrix of covariances between the scalar components of X.

In signal processing, the cross-covariance (or sometimes "cross-correlation") is a measure of similarity of two signals, commonly used to find features in an unknown signal by comparing it to a known one. It is a function of the relative time between the signals, is sometimes called the sliding dot product, and has applications in pattern recognition and cryptanalysis.


Statistics

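For random vectors X and Y, each containing random elements whose expected value and variance exist, the cross-covariance matrix of X and Y is defined by

$$\operatorname{cov}(X,Y)=\operatorname{E}\left[(X-\mu_X)(Y-\mu_Y)'\right],$$

where $\mu_X$ and $\mu_Y$ are vectors containing the expected values of X and Y, and the prime denotes the transpose. The vectors X and Y need not have the same dimension, and either might be a scalar value. Each element of the cross-covariance matrix is the covariance between one component of X and one component of Y, and is itself called a "cross-covariance".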
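As an illustration of the definition, the following sketch estimates the cross-covariance matrix from paired observations by replacing the expectation with a sample average. The function name `cross_covariance`, the list-of-lists layout, and the 1/n normalisation are assumptions made for this example; dividing by n - 1 is an equally common convention.

```python
from typing import List, Sequence


def cross_covariance(xs: Sequence[Sequence[float]],
                     ys: Sequence[Sequence[float]]) -> List[List[float]]:
    """Estimate cov(X, Y) from paired samples.

    xs[k] and ys[k] are the k-th joint observation of X (length p) and
    Y (length q). The result is the p-by-q matrix of sample averages of
    (X - mean_X)(Y - mean_Y)', using 1/n normalisation (an assumption).
    """
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("need the same positive number of samples for X and Y")
    p, q = len(xs[0]), len(ys[0])
    # Component-wise sample means stand in for the expected values.
    mean_x = [sum(x[a] for x in xs) / n for a in range(p)]
    mean_y = [sum(y[b] for y in ys) / n for b in range(q)]
    # Entry (a, b) is the sample covariance of X's a-th and Y's b-th component.
    return [[sum((x[a] - mean_x[a]) * (y[b] - mean_y[b])
                 for x, y in zip(xs, ys)) / n
             for b in range(q)]
            for a in range(p)]


# X is two-dimensional and Y is a scalar, so the result is a 2-by-1 matrix.
xs = [[1.0, 2.0], [2.0, 0.0], [3.0, 4.0]]
ys = [[2.0], [4.0], [6.0]]
c = cross_covariance(xs, ys)
```

Here X and Y have different dimensions, which the definition allows; the result has one row per component of X and one column per component of Y.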

Signal processing

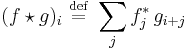

For discrete functions fi and gi the cross-covariance is defined as

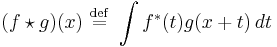

where the sum is over the appropriate values of the integer j and an asterisk indicates the complex conjugate. For continuous functions f(x) and g(x) the cross-covariance is defined as

$$(f \star g)(x) = \int f^*(t) \, g(x+t) \, dt,$$

where the integral is over the appropriate values of t.

The cross-covariance is similar in nature to the convolution of two functions.
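The discrete definition can be sketched directly as a sliding dot product: for each lag i, the conjugated samples of f are multiplied against g shifted by i, and the products are summed. In the sketch below the name `sliding_dot_product` is hypothetical, and samples outside either finite sequence are treated as zero, which is one possible reading of "the appropriate values of j".

```python
from typing import Dict, Sequence


def sliding_dot_product(f: Sequence[complex],
                        g: Sequence[complex]) -> Dict[int, complex]:
    """Return {i: sum_j conj(f[j]) * g[i + j]} for every lag i with overlap.

    Samples outside either sequence count as zero (an assumption).
    """
    result: Dict[int, complex] = {}
    for i in range(-(len(f) - 1), len(g)):
        total = 0j
        for j in range(len(f)):
            if 0 <= i + j < len(g):
                total += complex(f[j]).conjugate() * g[i + j]
        result[i] = total
    return result


# Locate a known feature (template) inside a longer signal.
template = [1, 2, 1]
signal = [0, 0, 0, 1, 2, 1, 0, 0]
scores = sliding_dot_product(template, signal)
best_lag = max(scores, key=lambda i: scores[i].real)  # lag with the largest score
```

The lag with the largest score is the shift at which the template best lines up with the signal, which is how the measure is used to find a known feature in an unknown signal.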

Properties

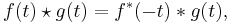

The cross-covariance of two signals is related to the convolution by:

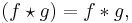

so that

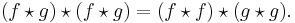

if $f$ is Hermitian, that is, if $f(-t) = f^*(t)$; a real, even $f$ is the simplest case. Evenness of $g$ alone is not sufficient.
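The relation between the signal-processing cross-covariance and convolution can be checked numerically for finite discrete signals. The sketch below assumes the sliding-dot-product definition, with each term formed as conj(f_j) g_{i+j}, and the usual discrete convolution; the `Signal` type and the helper names `cross_cov`, `convolve` and `flip_conj` are hypothetical and exist only for this example. It compares f ⋆ g with the convolution of the time-reversed conjugate of f with g, and then repeats the comparison against the plain convolution f * g for a Hermitian f, meaning one with f(-t) equal to the conjugate of f(t), which includes real even functions.

```python
from typing import Dict

# A signal maps an integer index to a sample; missing indices are zero.
Signal = Dict[int, complex]


def cross_cov(f: Signal, g: Signal) -> Signal:
    """(f star g)_i = sum_j conj(f_j) * g_{i+j}."""
    out: Signal = {}
    for j, fj in f.items():
        for k, gk in g.items():  # k = i + j, so the lag is i = k - j
            out[k - j] = out.get(k - j, 0) + fj.conjugate() * gk
    return out


def convolve(f: Signal, g: Signal) -> Signal:
    """(f * g)_i = sum_j f_j * g_{i-j}."""
    out: Signal = {}
    for j, fj in f.items():
        for k, gk in g.items():  # k = i - j, so the output index is i = j + k
            out[j + k] = out.get(j + k, 0) + fj * gk
    return out


def flip_conj(f: Signal) -> Signal:
    """Return the signal t -> conj(f(-t))."""
    return {-j: fj.conjugate() for j, fj in f.items()}


def close(a: Signal, b: Signal, tol: float = 1e-12) -> bool:
    """True when both signals agree at every index, up to rounding."""
    return all(abs(a.get(i, 0) - b.get(i, 0)) < tol for i in set(a) | set(b))


# General complex signals: cross-covariance vs. convolution with flipped conj(f).
f = {0: 1 + 2j, 1: 3 - 1j, 2: 0.5j}
g = {-1: 2 + 0j, 0: -1j, 3: 4 + 1j}
general_ok = close(cross_cov(f, g), convolve(flip_conj(f), g))

# Hermitian f: the cross-covariance coincides with the plain convolution.
f_herm = {-1: 1 - 1j, 0: 2 + 0j, 1: 1 + 1j}
hermitian_ok = close(cross_cov(f_herm, g), convolve(f_herm, g))

# A non-Hermitian f generally gives a different result from f * g.
differs = not close(cross_cov(f, g), convolve(f, g))
```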